According to Matt Drance, Frameworks Evangelist at Apple, Elvis has left the building, and he took the last WWDC ticket with him. Hope you got your tickets; it's gonna be swell.

Posted in: WWDC

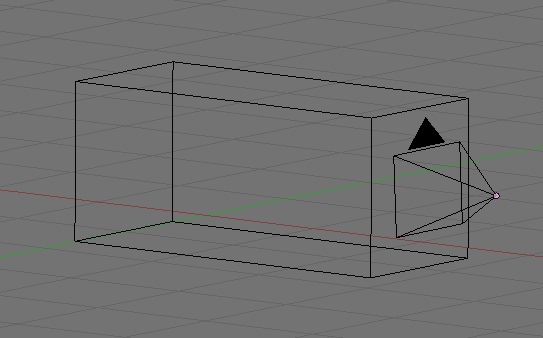

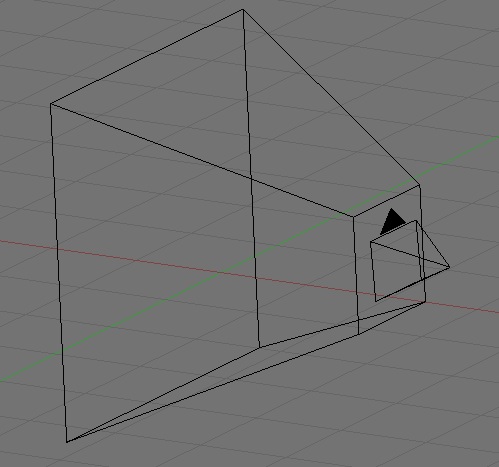

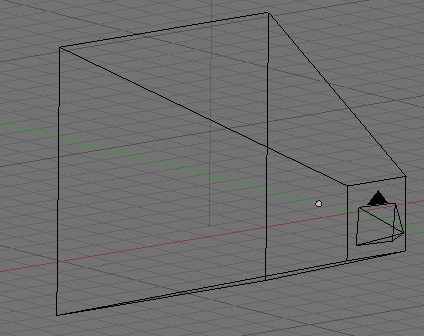

CGRect rect = view.bounds;

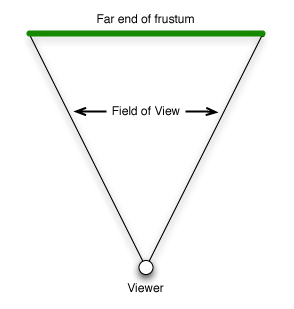

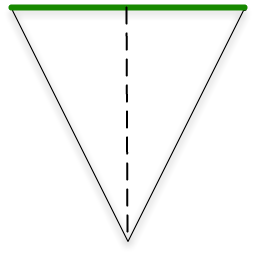

glOrthof(-1.0, // Left

1.0, // Right

-1.0 / (rect.size.width / rect.size.height), // Bottom

1.0 / (rect.size.width / rect.size.height), // Top

0.01, // Near

10000.0); // Far

glViewport(0, 0, rect.size.width, rect.size.height);

CGRect rect = view.bounds;

GLfloat size = .01 * tanf(DEGREES_TO_RADIANS(45.0) / 2.0);

glFrustumf(-size, // Left

size, // Right

-size / (rect.size.width / rect.size.height), // Bottom

size / (rect.size.width / rect.size.height), // Top

.01, // Near

1000.0); // Far

Note: A discussion of how glFrustumf() uses the passed parameters to calculate the shape of the frustum is going to have to wait until we've discussed matrices. For now, just take it on faith that this calculation works, okay?

- (void)drawView:(GLView*)view;

{

static GLfloat rot = 0.0;

static const Vertex3D vertices[]= {

{0, -0.525731, 0.850651}, // vertices[0]

{0.850651, 0, 0.525731}, // vertices[1]

{0.850651, 0, -0.525731}, // vertices[2]

{-0.850651, 0, -0.525731}, // vertices[3]

{-0.850651, 0, 0.525731}, // vertices[4]

{-0.525731, 0.850651, 0}, // vertices[5]

{0.525731, 0.850651, 0}, // vertices[6]

{0.525731, -0.850651, 0}, // vertices[7]

{-0.525731, -0.850651, 0}, // vertices[8]

{0, -0.525731, -0.850651}, // vertices[9]

{0, 0.525731, -0.850651}, // vertices[10]

{0, 0.525731, 0.850651} // vertices[11]

};

static const Color3D colors[] = {

{1.0, 0.0, 0.0, 1.0},

{1.0, 0.5, 0.0, 1.0},

{1.0, 1.0, 0.0, 1.0},

{0.5, 1.0, 0.0, 1.0},

{0.0, 1.0, 0.0, 1.0},

{0.0, 1.0, 0.5, 1.0},

{0.0, 1.0, 1.0, 1.0},

{0.0, 0.5, 1.0, 1.0},

{0.0, 0.0, 1.0, 1.0},

{0.5, 0.0, 1.0, 1.0},

{1.0, 0.0, 1.0, 1.0},

{1.0, 0.0, 0.5, 1.0}

};

static const GLubyte icosahedronFaces[] = {

1, 2, 6,

1, 7, 2,

3, 4, 5,

4, 3, 8,

6, 5, 11,

5, 6, 10,

9, 10, 2,

10, 9, 3,

7, 8, 9,

8, 7, 0,

11, 0, 1,

0, 11, 4,

6, 2, 10,

1, 6, 11,

3, 5, 10,

5, 4, 11,

2, 7, 9,

7, 1, 0,

3, 9, 8,

4, 8, 0,

};

glLoadIdentity();

glClearColor(0.7, 0.7, 0.7, 1.0);

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

glEnableClientState(GL_VERTEX_ARRAY);

glEnableClientState(GL_COLOR_ARRAY);

glVertexPointer(3, GL_FLOAT, 0, vertices);

glColorPointer(4, GL_FLOAT, 0, colors);

for (int i = 1; i <= 30; i++)

{

glLoadIdentity();

glTranslatef(0.0f,-1.5,-3.0f * (GLfloat)i);

glRotatef(rot, 1.0, 1.0, 1.0);

glDrawElements(GL_TRIANGLES, 60, GL_UNSIGNED_BYTE, icosahedronFaces);

}

glDisableClientState(GL_VERTEX_ARRAY);

glDisableClientState(GL_COLOR_ARRAY);

static NSTimeInterval lastDrawTime;

if (lastDrawTime)

{

NSTimeInterval timeSinceLastDraw = [NSDate timeIntervalSinceReferenceDate] - lastDrawTime;

rot+=50 * timeSinceLastDraw;

}

lastDrawTime = [NSDate timeIntervalSinceReferenceDate];

}
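The 60 passed to glDrawElements() is the number of indices: 20 faces times 3 indices per face. As a rough sketch (the macro and function names here are made up for illustration and are not part of the project), that count can be derived from the array itself, and the indices can be checked against the number of vertices:

```c
#include <assert.h>
#include <stddef.h>

/* Element count of a true array (this does not work on a pointer). */
#define INDEX_COUNT(a) (sizeof(a) / sizeof((a)[0]))

/* Returns 1 when every index is below vertexCount, 0 otherwise. */
static int IndicesInRange(const unsigned char *indices, size_t indexCount,
                          size_t vertexCount)
{
    assert(indices != NULL);
    for (size_t i = 0; i < indexCount; i++) {
        if (indices[i] >= vertexCount) {
            return 0;
        }
    }
    return 1;
}
```

With the arrays above, `INDEX_COUNT(icosahedronFaces)` is 60, and every index has to be below 12 because there are only twelve vertices.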

Posted in: Learn Cocoa

Posted in: Microsoft

/Developer/Platforms/iPhoneOS.platform/Developer/Library/Xcode/Project Templates/Application/

- (void)drawView:(GLView*)view;

{

Vertex3D vertex1 = Vertex3DMake(0.0, 1.0, -3.0);

Vertex3D vertex2 = Vertex3DMake(1.0, 0.0, -3.0);

Vertex3D vertex3 = Vertex3DMake(-1.0, 0.0, -3.0);

Triangle3D triangle = Triangle3DMake(vertex1, vertex2, vertex3);

glLoadIdentity();

glClearColor(0.7, 0.7, 0.7, 1.0);

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

glEnableClientState(GL_VERTEX_ARRAY);

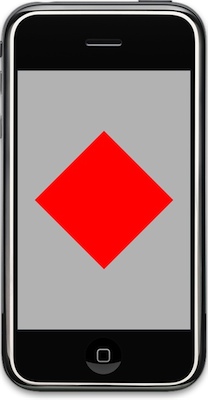

glColor4f(1.0, 0.0, 0.0, 1.0);

glVertexPointer(3, GL_FLOAT, 0, &triangle);

glDrawArrays(GL_TRIANGLES, 0, 3); // count is the number of vertices, not floats

glDisableClientState(GL_VERTEX_ARRAY);

}

Vertex3D vertex1 = Vertex3DMake(0.0, 1.0, -3.0);

Vertex3D vertex2 = Vertex3DMake(1.0, 0.0, -3.0);

Vertex3D vertex3 = Vertex3DMake(-1.0, 0.0, -3.0);

Triangle3D triangle = Triangle3DMake(vertex1, vertex2, vertex3);

// Alternative to the Triangle3D above (use one or the other):

GLfloat triangle[] = {0.0, 1.0, -3.0, 1.0, 0.0, -3.0, -1.0, 0.0, -3.0};

glLoadIdentity();

glClearColor(0.7, 0.7, 0.7, 1.0);

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

glEnableClientState(GL_VERTEX_ARRAY);

glColor4f(1.0, 0.0, 0.0, 1.0);

glVertexPointer(3, GL_FLOAT, 0, &triangle);

glDrawArrays(GL_TRIANGLES, 0, 3); // count is the number of vertices, not floats

glDisableClientState(GL_VERTEX_ARRAY);

- (void)drawView:(GLView*)view;

{

static GLfloat rotation = 0.0;

Vertex3D vertex1 = Vertex3DMake(0.0, 1.0, -3.0);

Vertex3D vertex2 = Vertex3DMake(1.0, 0.0, -3.0);

Vertex3D vertex3 = Vertex3DMake(-1.0, 0.0, -3.0);

Triangle3D triangle = Triangle3DMake(vertex1, vertex2, vertex3);

glLoadIdentity();

glRotatef(rotation, 0.0, 0.0, 1.0);

glClearColor(0.7, 0.7, 0.7, 1.0);

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

glEnableClientState(GL_VERTEX_ARRAY);

glColor4f(1.0, 0.0, 0.0, 1.0);

glVertexPointer(3, GL_FLOAT, 0, &triangle);

glDrawArrays(GL_TRIANGLES, 0, 3); // count is the number of vertices, not floats

glDisableClientState(GL_VERTEX_ARRAY);

rotation+= 0.5;

}

- (void)drawView:(GLView*)view;

{

Triangle3D triangle[2];

triangle[0].v1 = Vertex3DMake(0.0, 1.0, -3.0);

triangle[0].v2 = Vertex3DMake(1.0, 0.0, -3.0);

triangle[0].v3 = Vertex3DMake(-1.0, 0.0, -3.0);

triangle[1].v1 = Vertex3DMake(-1.0, 0.0, -3.0);

triangle[1].v2 = Vertex3DMake(1.0, 0.0, -3.0);

triangle[1].v3 = Vertex3DMake(0.0, -1.0, -3.0);

glLoadIdentity();

glClearColor(0.7, 0.7, 0.7, 1.0);

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

glEnableClientState(GL_VERTEX_ARRAY);

glColor4f(1.0, 0.0, 0.0, 1.0);

glVertexPointer(3, GL_FLOAT, 0, &triangle);

glDrawArrays(GL_TRIANGLES, 0, 6); // two triangles, three vertices each

glDisableClientState(GL_VERTEX_ARRAY);

}
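The definitions of Vertex3D and Triangle3D are not shown here. Assuming they are plain structs of floats, as sketched below, a Triangle3D is nine contiguous floats, which is why its address can be handed straight to glVertexPointer(). The count given to glDrawArrays(), on the other hand, is a number of vertices. `VertexCountForTriangles` is a hypothetical helper for illustration only.

```c
#include <assert.h>
#include <stddef.h>

/* Assumed definitions; the real headers are not shown in this post. */
typedef struct { float x, y, z; } Vertex3D;
typedef struct { Vertex3D v1, v2, v3; } Triangle3D;

/* Number of vertices stored in an array of triangleCount triangles. */
static size_t VertexCountForTriangles(size_t triangleCount)
{
    /* Each Triangle3D must be exactly three Vertex3D values, no padding. */
    assert(sizeof(Triangle3D) == 3 * sizeof(Vertex3D));
    return triangleCount * 3;
}
```

For the two-triangle array, `VertexCountForTriangles(2)` is 6, while the array holds 18 floats in total.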

static inline void Vertex3DSet(Vertex3D *vertex, CGFloat inX, CGFloat inY, CGFloat inZ)

{

vertex->x = inX;

vertex->y = inY;

vertex->z = inZ;

}

- (void)drawView:(GLView*)view;

{

Triangle3D *triangles = malloc(sizeof(Triangle3D) * 2);

Vertex3DSet(&triangles[0].v1, 0.0, 1.0, -3.0);

Vertex3DSet(&triangles[0].v2, 1.0, 0.0, -3.0);

Vertex3DSet(&triangles[0].v3, -1.0, 0.0, -3.0);

Vertex3DSet(&triangles[1].v1, -1.0, 0.0, -3.0);

Vertex3DSet(&triangles[1].v2, 1.0, 0.0, -3.0);

Vertex3DSet(&triangles[1].v3, 0.0, -1.0, -3.0);

glLoadIdentity();

glClearColor(0.7, 0.7, 0.7, 1.0);

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

glEnableClientState(GL_VERTEX_ARRAY);

glColor4f(1.0, 0.0, 0.0, 1.0);

glVertexPointer(3, GL_FLOAT, 0, triangles);

glDrawArrays(GL_TRIANGLES, 0, 6); // two triangles, three vertices each

glDisableClientState(GL_VERTEX_ARRAY);

if (triangles != NULL)

free(triangles);

}

- (void)drawView:(GLView*)view;

{

Vertex3D *vertices = malloc(sizeof(Vertex3D) * 4);

Vertex3DSet(&vertices[0], 0.0, 1.0, -3.0);

Vertex3DSet(&vertices[1], 1.0, 0.0, -3.0);

Vertex3DSet(&vertices[2], -1.0, 0.0, -3.0);

Vertex3DSet(&vertices[3], 0.0, -1.0, -3.0);

glLoadIdentity();

glClearColor(0.7, 0.7, 0.7, 1.0);

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

glEnableClientState(GL_VERTEX_ARRAY);

glColor4f(1.0, 0.0, 0.0, 1.0);

glVertexPointer(3, GL_FLOAT, 0, vertices);

glDrawArrays(GL_TRIANGLE_STRIP, 0, 4); // four vertices in the strip

glDisableClientState(GL_VERTEX_ARRAY);

if (vertices != NULL)

free(vertices);

}

glEnableClientState(GL_COLOR_ARRAY);
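For the GL_TRIANGLE_STRIP version, four vertices are enough for two triangles because each new vertex after the first two forms a triangle with the two before it. Here is a minimal sketch of that bookkeeping. The function names are hypothetical, and the winding order that OpenGL ES applies to alternating triangles is ignored for simplicity.

```c
#include <assert.h>
#include <stddef.h>

/* A strip of n vertices yields n - 2 triangles (none if n < 3). */
static size_t StripTriangleCount(size_t vertexCount)
{
    return vertexCount < 3 ? 0 : vertexCount - 2;
}

/* Writes the three vertex indices that make up triangle i of a strip. */
static void StripTriangleIndices(size_t i, size_t out[3])
{
    assert(out != NULL);
    out[0] = i;
    out[1] = i + 1;
    out[2] = i + 2;
}
```

With the four vertices used in the strip, triangle 0 uses vertices 0, 1, 2 and triangle 1 uses vertices 1, 2, 3.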

- (void)drawView:(GLView*)view;

{

Vertex3D vertex1 = Vertex3DMake(0.0, 1.0, -3.0);

Vertex3D vertex2 = Vertex3DMake(1.0, 0.0, -3.0);

Vertex3D vertex3 = Vertex3DMake(-1.0, 0.0, -3.0);

Triangle3D triangle = Triangle3DMake(vertex1, vertex2, vertex3);

Color3D *colors = malloc(sizeof(Color3D) * 3);

Color3DSet(&colors[0], 1.0, 0.0, 0.0, 1.0);

Color3DSet(&colors[1], 0.0, 1.0, 0.0, 1.0);

Color3DSet(&colors[2], 0.0, 0.0, 1.0, 1.0);

glLoadIdentity();

glClearColor(0.7, 0.7, 0.7, 1.0);

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

glEnableClientState(GL_VERTEX_ARRAY);

glEnableClientState(GL_COLOR_ARRAY);

glColor4f(1.0, 0.0, 0.0, 1.0);

glVertexPointer(3, GL_FLOAT, 0, &triangle);

glColorPointer(4, GL_FLOAT, 0, colors);

glDrawArrays(GL_TRIANGLES, 0, 3); // count is the number of vertices, not floats

glDisableClientState(GL_VERTEX_ARRAY);

glDisableClientState(GL_COLOR_ARRAY);

if (colors != NULL)

free(colors);

}

- (void)drawView:(GLView*)view;

{

static GLfloat rot = 0.0;

// This is the same result as using Vertex3D, just faster to type and

// can be made const this way

static const Vertex3D vertices[]= {

{0, -0.525731, 0.850651}, // vertices[0]

{0.850651, 0, 0.525731}, // vertices[1]

{0.850651, 0, -0.525731}, // vertices[2]

{-0.850651, 0, -0.525731}, // vertices[3]

{-0.850651, 0, 0.525731}, // vertices[4]

{-0.525731, 0.850651, 0}, // vertices[5]

{0.525731, 0.850651, 0}, // vertices[6]

{0.525731, -0.850651, 0}, // vertices[7]

{-0.525731, -0.850651, 0}, // vertices[8]

{0, -0.525731, -0.850651}, // vertices[9]

{0, 0.525731, -0.850651}, // vertices[10]

{0, 0.525731, 0.850651} // vertices[11]

};

static const Color3D colors[] = {

{1.0, 0.0, 0.0, 1.0},

{1.0, 0.5, 0.0, 1.0},

{1.0, 1.0, 0.0, 1.0},

{0.5, 1.0, 0.0, 1.0},

{0.0, 1.0, 0.0, 1.0},

{0.0, 1.0, 0.5, 1.0},

{0.0, 1.0, 1.0, 1.0},

{0.0, 0.5, 1.0, 1.0},

{0.0, 0.0, 1.0, 1.0},

{0.5, 0.0, 1.0, 1.0},

{1.0, 0.0, 1.0, 1.0},

{1.0, 0.0, 0.5, 1.0}

};

static const GLubyte icosahedronFaces[] = {

1, 2, 6,

1, 7, 2,

3, 4, 5,

4, 3, 8,

6, 5, 11,

5, 6, 10,

9, 10, 2,

10, 9, 3,

7, 8, 9,

8, 7, 0,

11, 0, 1,

0, 11, 4,

6, 2, 10,

1, 6, 11,

3, 5, 10,

5, 4, 11,

2, 7, 9,

7, 1, 0,

3, 9, 8,

4, 8, 0,

};

glLoadIdentity();

glTranslatef(0.0f,0.0f,-3.0f);

glRotatef(rot,1.0f,1.0f,1.0f);

glClearColor(0.7, 0.7, 0.7, 1.0);

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

glEnableClientState(GL_VERTEX_ARRAY);

glEnableClientState(GL_COLOR_ARRAY);

glVertexPointer(3, GL_FLOAT, 0, vertices);

glColorPointer(4, GL_FLOAT, 0, colors);

glDrawElements(GL_TRIANGLES, 60, GL_UNSIGNED_BYTE, icosahedronFaces);

glDisableClientState(GL_VERTEX_ARRAY);

glDisableClientState(GL_COLOR_ARRAY);

static NSTimeInterval lastDrawTime;

if (lastDrawTime)

{

NSTimeInterval timeSinceLastDraw = [NSDate timeIntervalSinceReferenceDate] - lastDrawTime;

rot+=50 * timeSinceLastDraw;

}

lastDrawTime = [NSDate timeIntervalSinceReferenceDate];

}
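The last few lines of the method tie the rotation to elapsed time rather than to the number of frames drawn, so the shape turns at 50 degrees per second whatever the frame rate. The same idea as a standalone sketch follows. `AdvanceRotation` is a hypothetical helper, and wrapping the angle into the range 0 to 360 is an addition of this sketch, not something the original code does.

```c
#include <assert.h>

/* Advance an angle (in degrees) at a fixed rate for the given elapsed
   time in seconds, then wrap the result into [0, 360).
   Assumes a non-negative rate and non-negative elapsed time. */
static float AdvanceRotation(float degrees, float degreesPerSecond,
                             double elapsedSeconds)
{
    assert(degreesPerSecond >= 0.0f && elapsedSeconds >= 0.0);
    degrees += (float)(degreesPerSecond * elapsedSeconds);
    while (degrees >= 360.0f) {
        degrees -= 360.0f;
    }
    return degrees;
}
```

In the method above, this would correspond to something like `rot = AdvanceRotation(rot, 50.0f, timeSinceLastDraw);`.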

static GLfloat rot = 0.0;

static const Vertex3D vertices[]= {

{0, -0.525731, 0.850651}, // vertices[0]

{0.850651, 0, 0.525731}, // vertices[1]

{0.850651, 0, -0.525731}, // vertices[2]

{-0.850651, 0, -0.525731}, // vertices[3]

{-0.850651, 0, 0.525731}, // vertices[4]

{-0.525731, 0.850651, 0}, // vertices[5]

{0.525731, 0.850651, 0}, // vertices[6]

{0.525731, -0.850651, 0}, // vertices[7]

{-0.525731, -0.850651, 0}, // vertices[8]

{0, -0.525731, -0.850651}, // vertices[9]

{0, 0.525731, -0.850651}, // vertices[10]

{0, 0.525731, 0.850651} // vertices[11]

};

static const Color3D colors[] = {

{1.0, 0.0, 0.0, 1.0},

{1.0, 0.5, 0.0, 1.0},

{1.0, 1.0, 0.0, 1.0},

{0.5, 1.0, 0.0, 1.0},

{0.0, 1.0, 0.0, 1.0},

{0.0, 1.0, 0.5, 1.0},

{0.0, 1.0, 1.0, 1.0},

{0.0, 0.5, 1.0, 1.0},

{0.0, 0.0, 1.0, 1.0},

{0.5, 0.0, 1.0, 1.0},

{1.0, 0.0, 1.0, 1.0},

{1.0, 0.0, 0.5, 1.0}

};

static const GLubyte icosahedronFaces[] = {

1, 2, 6,

1, 7, 2,

3, 4, 5,

4, 3, 8,

6, 5, 11,

5, 6, 10,

9, 10, 2,

10, 9, 3,

7, 8, 9,

8, 7, 0,

11, 0, 1,

0, 11, 4,

6, 2, 10,

1, 6, 11,

3, 5, 10,

5, 4, 11,

2, 7, 9,

7, 1, 0,

3, 9, 8,

4, 8, 0,

};

glLoadIdentity();

glTranslatef(0.0f,0.0f,-3.0f);

glRotatef(rot,1.0f,1.0f,1.0f);

glClearColor(0.7, 0.7, 0.7, 1.0);

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

glEnableClientState(GL_VERTEX_ARRAY);

glEnableClientState(GL_COLOR_ARRAY);

glColor4f(1.0, 0.0, 0.0, 1.0);

glVertexPointer(3, GL_FLOAT, 0, vertices);

glColorPointer(4, GL_FLOAT, 0, colors);

glDrawElements(GL_TRIANGLES, 60, GL_UNSIGNED_BYTE, icosahedronFaces);

glDisableClientState(GL_VERTEX_ARRAY);

glDisableClientState(GL_COLOR_ARRAY);

static NSTimeInterval lastDrawTime;

if (lastDrawTime)

{

NSTimeInterval timeSinceLastDraw = [NSDate timeIntervalSinceReferenceDate] - lastDrawTime;

rot+=50 * timeSinceLastDraw;

}

lastDrawTime = [NSDate timeIntervalSinceReferenceDate];