If you've gotten through the first few chapters of More iPhone 3 Development, you might be wondering why we included a sectionNameKeyPath when we didn't actually divide up the table into sections. What's the point of having the fetched results controller use sections if they're not displayed?

The truth of the matter is that we originally planned to take the SuperDB application further than we were able to. Unfortunately, we reached a point where we had to cut it off and move on to other topics, both to meet our deadline and to come in at a reasonable page count (as it was, we came in 250 pages over what we contracted for). Okay, we didn't actually meet our deadline, but we would have missed it by more.

Dave and I agreed to stop working on Core Data to get the book done and leave room for the other topics, but we left open the possibility of expanding the application further here in my blog. In order to be able to do that, we left in some vestiges of the original plan to make it easier to expand the application here.

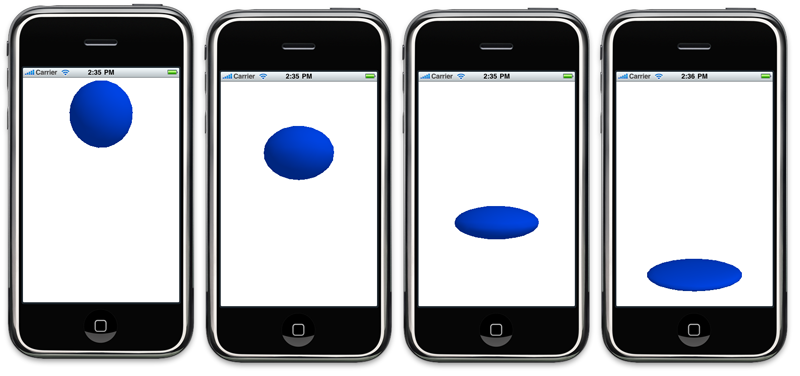

Here's the first expansion, which is to add alphabetical sections to the table, like so:

Let's continue on from the code in the 07 - SuperDB project. You can download the revised version from here. Make a new copy of it if you wish. The first thing we need to do is add a table view data source method that returns the title to be displayed in each section. To do that, add the following code to HeroListViewController.m, near the other table view methods:

- (NSString *)tableView:(UITableView *)tableView titleForHeaderInSection:(NSInteger)section

This is a very generic method that uses the values from the fetched results controller. It is basically a copy-and-paste bit of code that you can use unchanged in any controller that uses a fetched results controller with sections.
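For reference, a generic header-title method of this kind might look like the sketch below. It assumes the view controller exposes its fetched results controller as `self.fetchedResultsController`; it is an illustration of the usual pattern, not necessarily the exact body from the project.

```objc
// Fragment for HeroListViewController.m (requires UIKit and Core Data).
// Assumption: self.fetchedResultsController returns the controller's
// NSFetchedResultsController.
- (NSString *)tableView:(UITableView *)tableView
titleForHeaderInSection:(NSInteger)section {
    // Each section the fetched results controller computed is described by
    // an object conforming to NSFetchedResultsSectionInfo.
    id <NSFetchedResultsSectionInfo> sectionInfo =
        [[self.fetchedResultsController sections] objectAtIndex:section];

    // The section's name is the value returned by sectionNameKeyPath
    // for the objects in that section.
    return [sectionInfo name];
}
```

Because the method only asks the fetched results controller for the section name, nothing in it is specific to heroes.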

If you run your application now, however, you're going to get a separate section for each row (it would also crash, but we'll deal with that too). In the version you've got now, we specified either name or secretIdentity as our sectionNameKeyPath, so every unique name or secret identity becomes its own section. Generally, that's not what we want. So, the next step is to add virtual accessor methods to our Hero object that return the value we want to use to divide up the list of heroes. Let's do it alphabetically, which means we need to create methods that return the first letter of the name and of the secret identity. We can then use these new virtual accessor methods as our section name key paths, and the fetched results controller will divvy up the list by first letter.
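To make the idea concrete, here is a minimal sketch of what such first-letter accessors could look like in Hero.m. It assumes name and secretIdentity are NSString attributes on Hero, as the key paths above suggest. The empty-string fallback for a missing value is my own assumption, not something taken from the project.

```objc
// Fragment for Hero.m (requires Foundation and Core Data).
// Assumption: self.name and self.secretIdentity are NSString attributes.

- (NSString *)nameFirstLetter {
    NSString *value = self.name;
    // A nil or empty name has no first letter; fall back to an empty string.
    if ([value length] == 0) {
        return @"";
    }
    // Take the first composed character rather than the first UTF-16 unit,
    // so accented letters are not split in half.
    NSRange first = [value rangeOfComposedCharacterSequenceAtIndex:0];
    return [value substringWithRange:first];
}

- (NSString *)secretIdentityFirstLetter {
    NSString *value = self.secretIdentity;
    if ([value length] == 0) {
        return @"";
    }
    NSRange first = [value rangeOfComposedCharacterSequenceAtIndex:0];
    return [value substringWithRange:first];
}
```

The sketch deliberately leaves the letter's case alone. The section key has to group objects in the same order the sort descriptor puts them in, so folding case here would only be safe if the fetch request also sorts case-insensitively.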

In Hero.h, add the following two method declarations, just before the @end keyword:

- (NSString *)nameFirstLetter;

- (NSString *)secretIdentityFirstLetter;

Save Hero.h and switch over to Hero.m, and insert the implementation of these two methods, again right above the @end keyword:

- (NSString *)nameFirstLetter

- (NSString *)secretIdentityFirstLetter

Save Hero.m. Next, we have to make a few changes in HeroListViewController.m. First, change the assignment of sectionKey to reflect our new virtual accessor methods. Look for the following code in the fetchedResultsController method and change the sectionKey assignment under each case to switch from using name and secretIdentity to using our new first-letter methods:

...

case kByName:

case kBySecretIdentity:

...
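The bodies of the two cases are elided above. As a rough sketch, the changed assignments could look like this, where `tab` is a hypothetical name for whatever value the existing switch statement already tests:

```objc
// Fragment from inside the fetchedResultsController method.
// Assumption: `tab` stands in for the value the existing switch tests;
// sectionKey, kByName and kBySecretIdentity are the names used in the post.
switch (tab) {
    case kByName:
        // Previously @"name": one section per unique name.
        sectionKey = @"nameFirstLetter";
        break;
    case kBySecretIdentity:
        // Previously @"secretIdentity".
        sectionKey = @"secretIdentityFirstLetter";
        break;
    default:
        break;
}
```

Only the section key changes. The sort descriptors should still sort on name and secretIdentity, so that rows within each letter remain in alphabetical order.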

That's basically it. Well, not really. In testing this, I found that it crashed. For this new version, we need to tweak the fetched results controller delegate methods that we gave you in Chapter 2. Replace your existing implementations of controller:didChangeSection:atIndex:forChangeType: and controller:didChangeObject:atIndexPath:forChangeType:newIndexPath: with the following new versions:

- (void)controller:(NSFetchedResultsController *)controller didChangeObject:(id)anObject atIndexPath:(NSIndexPath *)indexPath forChangeType:(NSFetchedResultsChangeType)type newIndexPath:(NSIndexPath *)newIndexPath

- (void)controller:(NSFetchedResultsController *)controller didChangeSection:(id <NSFetchedResultsSectionInfo>)sectionInfo atIndex:(NSUInteger)sectionIndex forChangeType:(NSFetchedResultsChangeType)type

Since our original application gave every row its own section, we didn't have this problem. When we receive notice that a row has moved, we need to make sure that the number of sections in the table matches the number in the controller. It's possible that a row moved to a new section, possibly even causing a section to be deleted or inserted in the controller, but not the table. If a row is moved from one section to another and we don't account for it, the app will crash. If the moved row had been the last row in a section, we need to delete the section from the table, unless it was the last section, because tables have to have at least one section. If moving the row created a new section in the controller, we insert a new section into the table.
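For orientation, the sketch below shows the conventional way a section-change callback keeps the table's sections in step with the controller's. This is the common pattern from Apple's documentation, not the replacement code this post refers to, which also compares section counts when a row moves. It assumes `self.tableView` is the hero list's table view and that beginUpdates and endUpdates are called in controllerWillChangeContent: and controllerDidChangeContent:.

```objc
// Fragment for HeroListViewController.m (requires UIKit and Core Data).
// Assumption: self.tableView is the table showing the fetched results.
- (void)controller:(NSFetchedResultsController *)controller
  didChangeSection:(id <NSFetchedResultsSectionInfo>)sectionInfo
           atIndex:(NSUInteger)sectionIndex
     forChangeType:(NSFetchedResultsChangeType)type {
    switch (type) {
        case NSFetchedResultsChangeInsert:
            // A new first letter appeared: add the matching table section.
            [self.tableView insertSections:[NSIndexSet indexSetWithIndex:sectionIndex]
                          withRowAnimation:UITableViewRowAnimationFade];
            break;
        case NSFetchedResultsChangeDelete:
            // The last hero under a letter went away: drop that section.
            [self.tableView deleteSections:[NSIndexSet indexSetWithIndex:sectionIndex]
                          withRowAnimation:UITableViewRowAnimationFade];
            break;
        default:
            break;
    }
}
```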

And that's really it. You now have your SuperDB's hero list divided up by the first letter of either the hero's name or secret identity, depending on which tab is selected.

You should use the fetched results controller delegate methods from this posting instead of the ones in the book. The ones in the book work just fine for the applications in the book, but these are more flexible and will handle a greater variety of situations correctly.
