I am on vacation.

I did not intend to write a blog post while on vacation, but I need more than 140 characters to explain my recent Twitter rants. We're having a quiet night after several days in the Disney parks, so it's a good opportunity to expand on those tweets, since the 140-character versions are provoking a lot of anger.

The Spark

While at Disney World's Magic Kingdom, I wanted to look something up on Disney's website. Navigating to one of the Disney.com web pages using my iPhone resulted in an error page. That's right, I thought to myself, Disney uses Flash for almost everything, don't they?

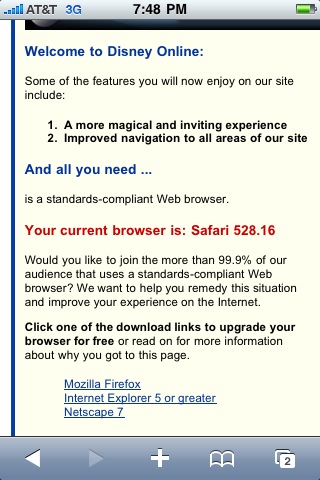

I'm not thrilled about a company doing that, but that's absolutely not the thing that set me off. It was the fact that Disney's explanation for why I couldn't view their page was that I wasn't using a "Standards compliant browser".

Now, say what you will about Mobile Safari, but one accusation you can't reasonably level at it is that it isn't standards-compliant. It's based on WebKit, and WebKit fully passes the Acid3 test. It's as standards-compliant as any browser, and certainly as any mobile browser. It's a hell of a lot more "standards compliant" than IE5 (look at the screenshot).

Disney is basically blaming the user for its own decision to require Flash on its website, and using made-up statistics to make the user sound like an anomaly. A weirdo. C'mon, be cool like 99.9% of our viewers! Yet they couch it in language that SOUNDS like good customer service (we want to help). Crikey!

Out of Place

Now, I find this odd. For all of their faults, Disney tends to be incredibly good at not insulting their customers. Some of the best customer service I've ever seen has been within the 47 square miles owned by the Disney corporation in Central Florida. Seriously. I'm not exaggerating here at all. Disney cast members bend over backwards to accommodate their customers, and the company has been incredibly progressive over the last thirty years or so in its treatment of customers. When they do screw up, they're usually quick to apologize and do everything possible to rectify the situation.

I had a situation with park security several years back. The details aren't important, but I was accused of doing something illegal (in front of my children and many, many strangers) in a situation where it would have been physically impossible for me (or anyone) to have done the thing I was accused of. The situation was rectified quickly once I asked for management involvement, and I was sent on my way with a sincere-sounding apology. When I got home from vacation, I received a box of gifts for my children with a hand-written apology from a VP. A few days later, I received another apology by phone and was given specific information about changes that would be made to their training to make sure that same situation didn't happen again.

That relatively minor situation, if handled wrong, could easily have ended with me never wanting to do business with Disney again. But it didn't, because they handled it right. Whether any of the apologies were sincere or not makes absolutely no difference. They acknowledged that they had made a mistake, apologized to me for making it, and then followed up afterwards to see if I needed anything. It wouldn't be reasonable to ask for or expect more than that. And to their credit, Disney management did not blame or scapegoat the cast member, either. They recognized it was their responsibility to train their cast members for foreseeable encounters, and that they had failed.

As a result, I have been back to Disney World several times since then. I've never had another serious situation myself, but I've become acutely aware of how this company handles customer service, and I often notice little details about the way Disney cast members treat people that probably go unnoticed by most visitors to the parks and hotels. With only rare exceptions, Disney does a phenomenal job interacting with their customers.

Except online.

When it comes to the online world, they seem to act with a much more typical corporate attitude, one that, if they were a person, would probably be labeled "arrogance".

So, anyway, my inability to look up something on Disney's website from my iPhone while standing in a Disney park led to my recent Twitter rant about Flash. It was a somewhat adolescent but very cathartic outburst that can basically be summed up as:

- Flash sucks

- Flash is not a standard, and

- Flash sucks

Oddly enough, these comments seemed to hit a nerve with a lot of people. I probably should have expected that, since many iPhone developers come from a Flash/Flex background, but I really didn't expect people to take it quite as personally as they did. The only other place I've seen people take criticism of a language or development environment so personally is when I've dared to criticize .NET or, before .NET existed, Visual Basic.

The twenty-four hours or so after my Twitter rant saw a fair number of replies, including several ad hominem attacks and many factually incorrect assertions about Flash being a standard, along with a lot of "the iPhone is FAIL cuz it don't support Flash" kinds of statements.

Let me just clarify my comments a bit and explain them in more rational terms here.

Flash is Dead

I hate to break it to you, but Flash, as it currently exists, is dead. Oh, it's not going to die quickly; it's going to die a slow, painful death precisely because so many large corporations like Disney have invested so much time and money in it. Flash's roots run way too deep for it to disappear quickly.

Here's the thing, though: Flash is a product of a different generation of computing. It's a product of a world where 90% of people used one platform and most of the rest used another. There was Windows, and there was the Mac. Then Linux gained some popularity and became a viable platform, yet for a long time Linux users couldn't access Flash websites. Eventually, Linux got Flash too.

But Adobe never lavished the kind of love on the Linux or Mac versions of the Flash plug-in that it did on the Windows version, and less-popular platforms were SOL because Flash is a proprietary platform: only Adobe can port it.

And now, the world is changing. People are increasingly browsing the web from mobile devices, and unlike the computer world of a decade ago, the mobile computing landscape is nothing like a monoculture or monopoly. There are several viable mobile platforms competing in that space: the iPhone, BlackBerry, Palm Pre, Windows Mobile, Android, Symbian, and probably others that have slipped my mind. All of these platforms are currently shipping on phones, and all come with browsers. None of them, except a solitary model of Android phone, has Flash.

Do you think Adobe is hard at work writing Flash virtual machines for every possible configuration of hardware and software that exists in the mobile space? Hell, no! They're not even willing to fix the massive memory leaks in the OS X Flash plug-in. No, they're going to wait for one or two clear leaders to emerge and then, if they can, and if their MBAs decide there's sufficient ROI in doing so, they'll develop Flash for those platforms.

If no clear victors emerge, who knows what we'll see. It's unlikely we'll ever see Flash on the iPhone.[1] Flash on the Hero, that lone Android phone, is painfully slow and clunky. Adobe is also unlikely to ever devote the resources necessary to fully develop and maintain versions for even the platforms they do decide to support. They're going to do the least they can to say that Flash is part of the mobile web, and that's it.

What I Mean by Sucks

When I say that Flash sucks, I'm not talking about ActionScript or the developer tools or anything of that nature. I'm not a Flash developer and never have been. Developing for Flash might be better than receiving oral sex for all I know. When I say that Flash sucks, I'm specifically referring to the leaky, crash-prone implementation of Flash available for Mac OS X. I'm selfish; I rant about things that affect me, and Flash definitely affects me. I'm talking about something that is the polar opposite of a "thin client": Flash is a client that can suck up 60% of the processor cycles on a high-end machine to run a recreation of a 40-year-old arcade game. I'm talking about a technology that is more likely to bring up the SBBD (spinning beach ball of death) than any other piece of Mac software. Flash on Windows is tolerable (barely), but even on a fast Mac, having even a single Flash item on a web page can be a horrible, horrible experience. Every Safari crash I've had in recent memory was directly caused by the Flash plug-in.

I'm absolutely not saying that Flash developers are bad people. I'm absolutely not saying they're dumb. I had no intention of saying a single thing about Flash developers at all. My intention was just to point out one of the problems of treating Flash as if it were a "de facto standard" and using it as a general-purpose web development tool. Flash is controlled by a single company. It has the same Achilles' heel as every other proprietary solution (including several Apple technologies like QuickTime, iTunes, and the iPhone SDK): it runs only where that one company decides it will run. For web development, that was sort of okay when there were only three operating systems to write for and one of them had most of the market share.

I'm not even saying proprietary is always bad. I love my proprietary iPhone and Mac, and you can pry them from my cold, dead fingers. But I wouldn't ever advocate supporting only the iPhone or the Mac on your website simply because I love them. The whole point of the web is that it's platform-agnostic (or, if you buy into Google's point of view, it IS the platform). When you put something on the web, it should be readable by any device that can get on the internet. Your site should use open standards so that developers for platforms that can't already view your content can implement that functionality for their users simply by referring to the standard. You should only use a proprietary option like Flash when you have a compelling reason to do so. Implementing a drop-down menu in your navigation bar is NEVER a compelling reason. If something can be done with JavaScript and HTML, you'd better have a damn good, solid reason for doing it in Flash (or any other proprietary solution, for that matter) rather than using standards-compliant tools, and that reason had better not be "it's what our developer knows/likes" or "it was convenient".

Degrade Gracefully

Someone responded to my Twitter rant by pointing out the crux of the original problem: when you put something on the web, it should at the very least degrade gracefully. This is just common sense. If you detect that a browser can't support some feature you use, don't assume it's because your user is running old software. It's just as likely that they're running newer software that wasn't around when you wrote your detection algorithm. Don't make any assumptions or implications in your error page. Just describe, in as much detail as possible, what the problem is, and apologize for the inconvenience. That's all. You don't need to explain or defend your choice. Treat your virtual customers the same way you would treat real ones in person. If you've made a decision that will inconvenience some of your customers, man up and live with it; don't try to place the blame back on your user for your fracking decision.
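Here's a minimal sketch of what that advice might look like in practice, assuming a page that can list the capabilities it depends on. The `Requirement` type and the `describeUnsupported` and `hasGlobal` functions are hypothetical names made up for illustration, not part of any library. The idea is to check for the capability itself rather than guess from the browser's name or version, and when something is missing, say what's missing and apologize, without implying the visitor's browser is old or non-standard.

```typescript
// Hypothetical shape for one capability a page depends on (illustrative only).
interface Requirement {
  name: string;               // human-readable name shown to the visitor
  isSupported: () => boolean; // runtime check for the capability itself
}

// Hypothetical helper: true if a global with this name exists in the current environment.
function hasGlobal(name: string): boolean {
  return typeof (globalThis as unknown as Record<string, unknown>)[name] !== "undefined";
}

// Returns an apology naming what is missing, or null if everything is available.
// It deliberately says nothing about the visitor's browser being old or non-compliant.
function describeUnsupported(requirements: Requirement[]): string | null {
  const missing = requirements
    .filter((req) => !req.isSupported())
    .map((req) => req.name);
  if (missing.length === 0) {
    return null;
  }
  return (
    `This page uses ${missing.join(", ")}, which isn't available here. ` +
    "We're sorry for the inconvenience."
  );
}

// Example: probe for features directly instead of sniffing the user agent.
const requirements: Requirement[] = [
  { name: "the Canvas API", isSupported: () => hasGlobal("HTMLCanvasElement") },
  { name: "the Fetch API", isSupported: () => hasGlobal("fetch") },
];

const message = describeUnsupported(requirements);
console.log(message ?? "All required features are available.");
```

A browser that shipped after this check was written still passes it, as long as it actually provides the feature, which is exactly the case a user-agent whitelist gets wrong.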

The Future of Flash

I understand what causes some of these attacks I've received. I really do: panic. When people point out to a Flash developer that Flash is not well-positioned for an increasingly mobile web, Flash developers feel a rush of panic. They go into defensive mode. They want those statements to be wrong. They don't like the fact that something they like and have invested a lot of time and energy into might be obsolete in a few short years. This isn't anything unique to Flash. I've seen it many times before. Some people manage to hang on by finding niche work (hell, I know full-time COBOL developers), others (sometimes grudgingly) move to other technologies, and still others, like the many NeXTSTEP developers out there who got a second chance with Mac OS X and a third chance with the iPhone, get a reprieve.

Hell, I never thought I'd find a way to code in Objective-C for a living. It was a dying language when I started learning it. I didn't learn it because it would make me money;[2] I learned it because I saw something in it that I thought was right. I learned it because I wanted to be able to write code that was as good as the code I saw from the NeXT developers.

What will happen with Flash? Hell if I know. My current level of confidence in Adobe is not very high. The management team there has somehow managed to take a customer base that was rabidly loyal and turn it into customers who feel trapped and desperately want an alternative. This has happened in less than a decade. Talk about spending political capital! Somewhere along the line, Adobe stopped being a company that did, first and foremost, what its customers needed, and instead became a company that looked to make the most money it could with the least expenditure. It's a short-term strategy taught in many business schools (including Harvard) using impressive-sounding phrases like "maximizing shareholder value". Yet it's a strategy that anyone with any common sense (aka not an MBA) knows is completely and utterly moronic. In the long term, rabidly loyal fans are far better than great salespeople. They're better than good advertising campaigns, slogans, or even Super Bowl ads. They're better than product placement in a summer blockbuster.

And you can't buy them for any price.

Any management team that can do what Adobe's has done in the past ten years truly deserves to die. Frankly, I wouldn't bet on them doing the right thing in any particular situation, including this one.

But that doesn't mean there's no hope. There are many ways that Adobe could save Flash/Flex for the mobile world. One way would be to create something like Google's GWT: an environment where some or all of the code gets translated into HTML and JavaScript to be run on the client, leaving to a VM only those tasks that can't reasonably be handled that way.

With the determination to do it, and the willingness to recognize that the world has, indeed, changed, Adobe could future-proof Flash/Flex code. It would be a hell of a first step back to having rabidly loyal fans. As an aside, a Carbon-free, 64-bit clean, GCD-enabled Photoshop would be another big step in that direction.

Learning is Cool

But, even if Adobe continues to be Adobe, all is not lost. You may like a lot of things about Flash/Flex and ActionScript, but learning a new language and new frameworks is very possible. In fact, it's a lot of fun. It's an adventure. The really hard, brain-bending stuff is the conceptual stuff, much of which you've already got worked out from learning to develop in ActionScript. Heck, don't even wait for Flash to die! Cross-training is good for developers, and you should look forward to an opportunity to see how different languages and frameworks have solved the same problems. You'd be surprised at how much you can use from other languages when writing code.

And if you face the prospect of learning a new language with more than a little trepidation, maybe software development is not the right line of work for you. And I mean that very seriously.

1 - If Adobe manages to come up with a 64-bit clean, GCD-enabled Photoshop, then all bets are off.

2 - A joke from a few years back that I heard from Mike Lee jumps to mind here. What's the difference between a Cocoa developer and a large pizza? A large pizza can still feed a family of four.

Posted in: Rant
